AWS introduces new instance with up to 65 percent better price-performance for compute-intensive HPC workloads, including engineering simulations.

Computational fluid dynamics (CFD) is a mainstay for design engineers working across automotive, aerospace, manufacturing and other sectors. Applications such as OpenFOAM, Siemens Simcenter STAR-CCM+, Ansys Fluent, and NASA’s FUN3D and OVERFLOW all enable engineering teams to test their designs thoroughly.

The convenience and lower relative cost of simulation-based testing compared to physical testing are well understood. This is why so many organizations are seeking to increase the proportion of testing they do via simulation. For this to happen, they need to use increasingly sophisticated models.

Increasing Headaches for Design Teams

CFD simulations are incredibly compute-intensive, demanding specialist high-performance computing (HPC) infrastructure to run. And the more complex the model, the larger it is and the more computing power it needs to run.

Many organizations operate dedicated on-premises HPC clusters for this purpose. However, this infrastructure is by its very nature capacity-constrained. This means designers are increasingly unable to run simulations of the size they would like, and must instead continue to rely on more physical testing than would otherwise be required. Additionally, sharing a constrained resource pool can mean engineering teams have to wait their turn for access, which extends product development timelines.

Some have begun moving these workloads to the cloud, to benefit from on-demand access to virtually limitless capacity. However, as these workloads scale to tens of thousands of cores, cost-efficiency becomes increasingly important.

Up to 65 Percent Better Price-Performance

To help engineering teams run the larger, more-detailed and more-accurate models they aspire to—and to do so cost-effectively—AWS has created a purpose-built HPC instance, known as Hpc6a.

Hpc6a runs on the latest, third-generation AMD EPYC processors, and efficiently scales to create very large clusters within a single AWS Availability Zone. Elastic Fabric Adapter (EFA) provides the fast, 100 Gbps inter-instance networking required by tightly coupled HPC workloads, while the AWS Nitro System offloads the hypervisor onto dedicated hardware, meaning all cores are available to the workload. Engineers can use AWS ParallelCluster to provision Hpc6a instances alongside other AWS instance types in the same cluster, enabling them to run different workloads optimized for different instance types.

Over and above the ability to run virtually any scale of simulation on demand, Hpc6a delivers up to 65 percent better price-performance than comparable x86-based compute-optimized Amazon Elastic Compute Cloud (EC2) instances, such as those typically used by customers for CFD workloads today.

Benchmarking AWS Hpc6a

For any organization looking to run large or very large simulations cost-effectively, efficient, linear scaling is essential. Simply put, when you double the core count, you need performance to double as well.
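The idea of linear scaling can be made concrete with a small calculation. The sketch below computes strong-scaling efficiency relative to a baseline run, where 1.0 means performance grew exactly in proportion to the core count. The function name and the timings are hypothetical and chosen only for illustration; they are not taken from the benchmarks described in this article.

```python
def scaling_efficiency(base_cores, base_seconds, cores, seconds):
    """Strong-scaling efficiency relative to a baseline run.

    1.0 means the speedup matched the increase in core count exactly.
    """
    speedup = base_seconds / seconds      # how much faster the larger run was
    ideal_speedup = cores / base_cores    # how much faster it would be if scaling were linear
    return speedup / ideal_speedup


# Hypothetical (cores, wall-clock seconds) pairs for one fixed-size simulation.
# Illustrative values only, not benchmark data.
runs = [(4_000, 1000.0), (8_000, 500.0), (16_000, 260.0)]

base_cores, base_seconds = runs[0]
efficiencies = {
    cores: scaling_efficiency(base_cores, base_seconds, cores, seconds)
    for cores, seconds in runs
}

for cores, eff in efficiencies.items():
    print(f"{cores:>6} cores: {eff:.0%} efficiency")
```

In this made-up data set, doubling from 4,000 to 8,000 cores halves the run time (efficiency of 1.0), while the 16,000-core run falls slightly short of the ideal.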

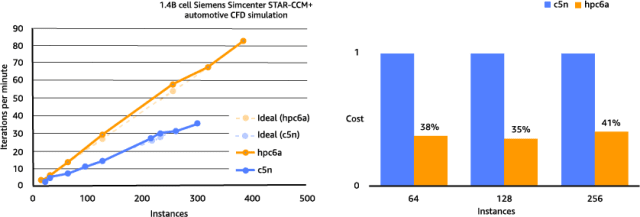

To test the scaling efficiency and price-performance of Hpc6a—and compare this to the C5n, which is a compute- and network-optimized Amazon EC2 instance commonly used for CFD simulations today—we benchmarked a number of CFD applications.

We ran models of varying sizes, starting at around 100-200 million computational cells, which is the scale at which many engineering teams are currently running CFD simulations. We also ran a 1.4-billion-cell simulation using Siemens Simcenter STAR-CCM+ with automotive data from the DrivAer model, to demonstrate the opportunity for engineers to immediately and significantly expand the scale of their simulations. Our STAR-CCM+ test went up to nearly 400 nodes (approximately 40,000 cores), and demonstrated that even at this size, scaling efficiency remains around 100 percent. The graph in Figure 1 shows this near-linear increase in performance as the instance count grows. It also shows the relative cost of Hpc6a compared to C5n.

Figure 1. Benchmark results for Hpc6a on Siemens Simcenter STAR-CCM+, compared to the C5n instance, which is a compute- and network-optimized Amazon EC2 instance commonly used for CFD workloads. (Image courtesy of AWS.)

Software Licensing Benefits

By maintaining this level of scaling efficiency, even in very large clusters, Hpc6a enables engineers to run the same simulation on approximately 40,000 cores in a tenth of the time it would take on 4,000 cores, with more or less the same infrastructure costs. As well as delivering much faster results, this can lead to significant software cost savings.

For example, some CFD applications calculate license costs purely on the number of hours the software is used. Running the same simulation in a tenth of the time therefore means a tenth of the software costs.
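The arithmetic behind this can be sketched in a few lines. The example below assumes perfectly linear scaling, infrastructure billed per core-hour, and a license billed per hour of use. The function name and both rates are hypothetical placeholders, not AWS or vendor prices.

```python
def run_costs(cores, hours, infra_rate_per_core_hour, license_rate_per_hour):
    """Return (infrastructure cost, license cost) for a single simulation run."""
    infrastructure = cores * hours * infra_rate_per_core_hour
    license_cost = hours * license_rate_per_hour
    return infrastructure, license_cost


# Hypothetical rates, for illustration only.
INFRA_RATE = 0.03      # currency units per core-hour (assumed)
LICENSE_RATE = 50.0    # currency units per hour of software use (assumed)

# The same simulation: 10 hours on 4,000 cores, or 1 hour on 40,000 cores
# if scaling is perfectly linear.
small_infra, small_license = run_costs(4_000, 10.0, INFRA_RATE, LICENSE_RATE)
large_infra, large_license = run_costs(40_000, 1.0, INFRA_RATE, LICENSE_RATE)

print(f"4,000 cores:  infrastructure {small_infra:.2f}, license {small_license:.2f}")
print(f"40,000 cores: infrastructure {large_infra:.2f}, license {large_license:.2f}")
```

Both runs consume the same 40,000 core-hours, so the infrastructure cost is unchanged, while the hourly license cost drops by a factor of ten.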

Elsewhere, where licensing is based on core counts, some application providers price in bands. It may be possible to use more cores within the same band at no additional cost, or to move up to the next band and use significantly more cores, with only a marginal cost increase.
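Banded pricing can be illustrated with a simple lookup. The band limits and prices below are entirely hypothetical and do not reflect any vendor's price list; the sketch only shows how a larger core count can fall in the same band, or in the next band at a small premium.

```python
import bisect

# Hypothetical license bands: (maximum cores covered, price per hour).
# Invented values for illustration, not a real price list.
BANDS = [(1_000, 100.0), (10_000, 120.0), (50_000, 130.0)]


def band_price(cores):
    """Return the hourly license price of the smallest band that covers `cores`."""
    limits = [limit for limit, _ in BANDS]
    index = bisect.bisect_left(limits, cores)  # first band whose limit >= cores
    if index == len(BANDS):
        raise ValueError(f"{cores} cores exceeds the largest license band")
    return BANDS[index][1]


for cores in (4_000, 10_000, 40_000):
    print(f"{cores:>6} cores -> {band_price(cores):.2f} per hour")
```

With these assumed bands, 4,000 and 10,000 cores cost the same per hour, and moving up to 40,000 cores raises the hourly price only slightly.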

Real-World Impacts

So, what does all of this mean in terms of real-world impact? Firstly, by freeing themselves from the constraints of on-premises HPC resources, engineering teams will be able to get results faster, and at more convenient times, making it easier to meet project deadlines. Secondly, the ability to run more sophisticated simulations cost-effectively can increase certainty in the results, and so help reduce the amount of physical testing required. These factors combine to help reduce the overall cost and duration of product development cycles, resulting in faster times to market and more competitive product pricing.

Get Started Today

Hpc6a is available now in the US East (Ohio) and AWS GovCloud (US-West) Regions. Instances can be purchased On-Demand or as part of a flexible Savings Plan. To learn more, go to aws.amazon.com and visit the dedicated CFD page.